Artificial intelligence has now become part of our everyday lives, significantly changing our approach to work, communication and decision-making processes. The full potential of AI emerges when it is combined with the scalability and power of cloud computing, allowing complex models to be trained and deployed on a large scale with agility and flexibility.

In this scenario, Microsoft Azure is positioned as a leader in democratising access to these technologies, offering a versatile platform for integrating AI into business processes.

Fundamental concepts: Machine Learning and Deep Learning

To understand the potential of artificial intelligence, it is essential to start with its foundations. AI is a discipline that focuses on the development of systems that can simulate human behaviour, and it is based on mathematical models and algorithms that are trained on data.

Among its main components is Machine Learning (ML), a central method by which systems learn from data, improving their performance without the need for explicit programming. In other words, it enables models to identify connections and trends, called patterns, within large datasets in order to perform tasks.

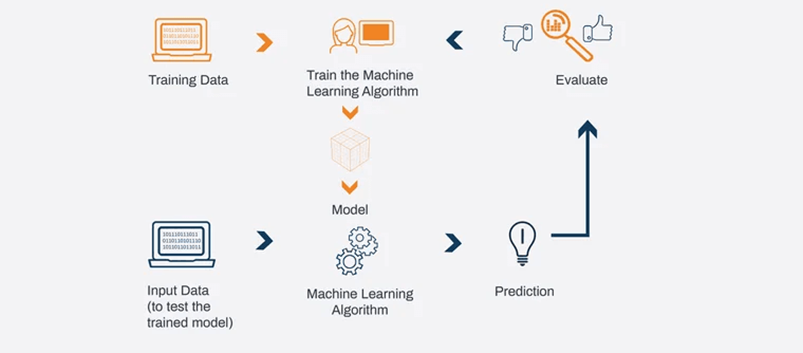

A key aspect of Machine Learning is model training, a process of feeding the system with labelled (or unlabelled) data to ‘teach’ it to make predictions. For example, a model can be trained to recognise spam or non-spam emails by analysing thousands of past examples.

ML applications can be divided into three main categories: classification, such as distinguishing images of cats from those of dogs or identifying objects; regression, such as predicting the price of a house based on characteristics such as location and square footage; and clustering, such as grouping users with similar behaviour on a website to personalise their experience.

A significant development in the field is Deep Learning, which uses neural networks inspired by the functioning of the human brain. This approach underlies advanced technologies such as speech recognition and Large Language Models (LLMs), such as those used in generative AI systems.

Among the most well-known and widely used Large Language Models (LLMs) are:

- GPT (Generative Pre-trained Transformer) from OpenAI: uses billions of parameters to answer questions;

- LLaMA from Meta: open-source model designed to be lighter;

- Claude from Anthropic: the philosophy of the model is based on responsible use.

The success of these models lies largely in the training and validation process. After training, the model is tested on a separate dataset to assess how well it generalises, a crucial step for detecting overfitting, where a model performs well on its training data but fails on new data.
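To make the idea of overfitting concrete, here is a minimal sketch in plain Python. The dataset, the two toy "models" and the helper names are all invented for illustration: one model simply memorises its training examples, the other learns a single threshold from them.

```python
# Toy data (made up): the label is 1 when the number is 50 or more, else 0.
data = [(x, 1 if x >= 50 else 0) for x in range(100)]
train = [pair for pair in data if pair[0] % 2 == 0]  # even inputs
test = [pair for pair in data if pair[0] % 2 == 1]   # odd inputs, never seen in training

# Overfitted "model": a lookup table of the training examples.
lookup = dict(train)

def memoriser(x):
    # Unseen inputs fall back to a fixed guess of 0.
    return lookup.get(x, 0)

# Simpler model: learn one threshold from the training data.
learned_threshold = min(x for x, y in train if y == 1)

def threshold_model(x):
    return 1 if x >= learned_threshold else 0

def accuracy(model, examples):
    correct = sum(1 for x, y in examples if model(x) == y)
    return correct / len(examples)

print("memoriser:", accuracy(memoriser, train), accuracy(memoriser, test))
print("threshold:", accuracy(threshold_model, train), accuracy(threshold_model, test))
```

The memoriser is perfect on the training set but gets only half of the held-out examples right, which is exactly the gap that testing on a separate dataset is meant to reveal.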

Main use cases

The main use cases include:

- Predictions and forecasting: the ability to analyse historical data to predict future events, such as customer behaviour or market demand;

- Anomaly detection: detecting deviations from normal usage patterns, essential for security, fraud monitoring and maintaining complex systems;

- Natural language processing (NLP): the ability to take text, understand it and respond appropriately, as in translation systems or sentiment analysis;

- Computer vision: the ability to process images and return information, such as in the recognition of objects, faces or situations in images and videos;

- Conversational AI: enabling machines to communicate with humans through fluid and natural conversations, as in chatbots or virtual assistants.

These use cases represent only a part of the incredible possibilities.

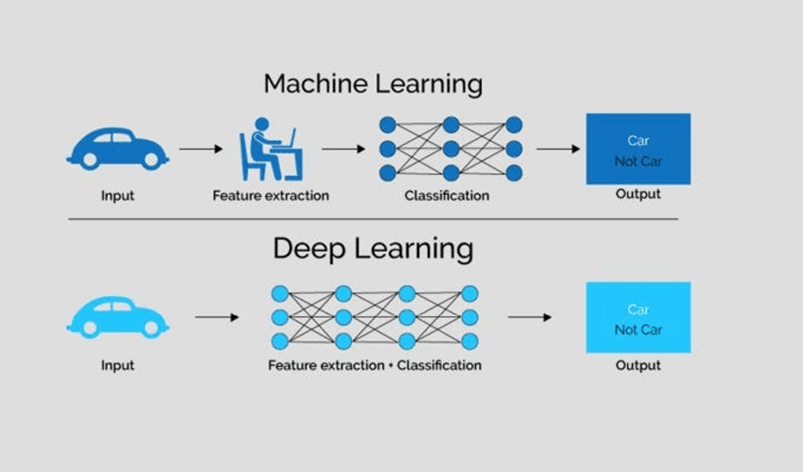

Machine Learning vs Deep Learning: differences and strengths

Machine Learning, Deep Learning and Natural Language Processing are three fundamental areas of artificial intelligence, but each focuses on different aspects of ‘understanding’ and ‘interacting’ with data.

- Machine Learning (ML) is a branch of AI that focuses on the development of algorithms capable of learning from data.

- Deep Learning (DL) is a sub-category of machine learning that focuses on the use of deep neural networks, i.e. machine learning models with multiple layers. These models are designed to simulate the functioning of the human brain and are particularly powerful when it comes to working with large volumes of unstructured data, such as images, audio or text. Deep learning underpins technologies such as speech recognition, computer vision (such as facial recognition) and machine translation.

- Natural Language Processing (NLP) focuses on the interaction between computers and human language. It can make use of both machine learning and deep learning techniques to improve language understanding.

Then there are other important areas such as Computer Vision, which enables computers to ‘see’ and understand the content of images and videos. Others are still being developed, such as Quantum Computing, which uses the principles of quantum mechanics to tackle computations that are not feasible even with conventional supercomputers.

Machine Learning Models

To create a machine learning model, the first fundamental element is an algorithm, i.e. a set of instructions that the model uses to learn from the data. In simple terms, we can imagine a model as a mathematical function, represented by the formula y = f(x), where x represents the input (data) and y the output (expected result).

A central concept in the construction of a model is the role of features and labels within the training dataset.

Features are the attributes or independent variables that describe the data, while labels are the outcome or dependent variable that we want to predict. For example, in a dataset analysing user behaviour, a feature could be ‘time spent on the site’, while the label could be ‘purchase made: yes/no’.
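The split between features and labels can be sketched in a few lines of Python. The rows, the column names and the hand-written function `f` below are assumptions made up for illustration; `f` only stands in for what a trained model would do.

```python
# Each row holds the features (x) and the label (y). Values are invented.
dataset = [
    {"minutes_on_site": 2.0, "pages_viewed": 1, "purchase_made": False},
    {"minutes_on_site": 12.5, "pages_viewed": 6, "purchase_made": True},
    {"minutes_on_site": 7.0, "pages_viewed": 4, "purchase_made": True},
    {"minutes_on_site": 1.5, "pages_viewed": 2, "purchase_made": False},
]

FEATURES = ["minutes_on_site", "pages_viewed"]  # independent variables
LABEL = "purchase_made"                         # dependent variable

# Separate the inputs from the value we want to predict.
X = [[row[name] for name in FEATURES] for row in dataset]
y = [row[LABEL] for row in dataset]

def f(x):
    """Hand-written stand-in for a trained model: y = f(x)."""
    minutes, pages = x
    return minutes >= 5.0 and pages >= 3

predictions = [f(x) for x in X]
print(list(zip(predictions, y)))
```

In a real project the rule inside `f` would not be written by hand; the training algorithm would derive it from `X` and `y`.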

Before the data can be used for learning, it is essential to subject it to a preparation and processing step (ETL: Extract, Transform, Load). During this phase, we inspect the data to ensure that it is clean and ready for use, removing missing values, handling outliers and transforming the information into a suitable format. In addition, we can apply feature engineering techniques to create new features based on existing ones, thereby improving the performance of the model.
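As a rough illustration of this preparation step, the sketch below drops rows with missing values, discards an implausible outlier and derives one new feature. The rows, the price ceiling and the `metres_per_room` feature are all assumptions chosen for the example, not a recommended recipe.

```python
# Invented house-price rows; None marks a missing value.
raw_rows = [
    {"square_metres": 80.0, "rooms": 3, "price": 240000.0},
    {"square_metres": None, "rooms": 2, "price": 150000.0},   # missing value
    {"square_metres": 120.0, "rooms": 4, "price": 390000.0},
    {"square_metres": 95.0, "rooms": 3, "price": 9900000.0},  # likely outlier
]

MAX_PLAUSIBLE_PRICE = 2_000_000.0  # assumed ceiling, for illustration only

def prepare(rows):
    cleaned = []
    for row in rows:
        # Remove rows that contain missing values.
        if any(value is None for value in row.values()):
            continue
        # Handle outliers, here simply by discarding them.
        if row["price"] > MAX_PLAUSIBLE_PRICE:
            continue
        # Feature engineering: derive a new feature from existing ones.
        new_row = dict(row)
        new_row["metres_per_room"] = row["square_metres"] / row["rooms"]
        cleaned.append(new_row)
    return cleaned

prepared = prepare(raw_rows)
print(prepared)
```

Dropping rows is only one option; missing values can also be filled in and outliers capped, depending on the dataset.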

The algorithms, once applied to the data, can be distinguished into different learning categories:

- Supervised: the model learns from a labelled dataset, where each input is associated with a known output (e.g. classification or regression);

- Unsupervised: the model works on unlabelled data and tries to find hidden patterns or groups (e.g. clustering);

- Semi-supervised: a combination of the two approaches, in which only part of the data is labelled;

- Reinforcement learning: the model learns through a reward and penalty system, continuously adapting based on feedback (e.g. in games or autonomous driving systems).

What are Transformers?

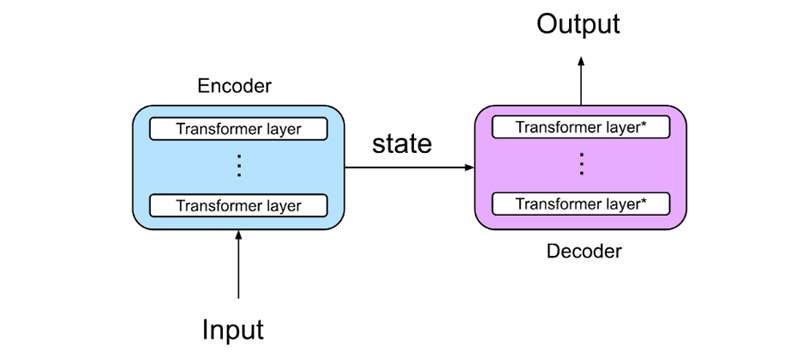

Transformers are a neural network architecture designed to handle sequential data (such as text, images or audio) by introducing a mechanism called self-attention, which allows the model to analyse the entire sequence simultaneously and assign a weight to each element relative to the others. They are particularly good at capturing relationships between words, even if they are far apart in a sentence.

Example: Imagine that you are using a Transformer to complete the sentence: “the sky is very…”

- Input: [‘the’, ‘sky’, ‘is’, ‘very’].

- Self-Attention: Each word seeks relationships with the others (e.g. ‘very’ gives attention to ‘sky’).

- Output: The model generates a probable word, such as ‘blue’.

This process is repeated word by word, until the entire sentence is completed.
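The self-attention step can be sketched with scaled dot-product attention in plain Python. This is a simplification under stated assumptions: the two-dimensional word vectors are invented, and each vector is used directly as query, key and value, whereas a real Transformer learns separate projections and uses many attention heads.

```python
import math

def softmax(scores):
    # Subtract the maximum for numerical stability.
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Return (outputs, weights) for scaled dot-product self-attention."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this word to every word in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Each output is a weighted mix of all the word vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

tokens = ["the", "sky", "is", "very"]
embeddings = [[0.1, 0.0], [1.0, 0.2], [0.0, 0.1], [0.9, 0.3]]  # made-up values

outputs, weights = self_attention(embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

With these invented vectors, the row for ‘very’ puts its largest weight on ‘sky’, mirroring the example above.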

What are Tokens?

Tokens are the basic units into which a language model, such as a Transformer, breaks down text in order to understand and manipulate it. In a language context, they can represent words, parts of words, special characters or symbols, sentences or concepts.

When a language model receives text as input, it first breaks it down into tokens. This process is called tokenization. Each token is then associated with a numerical representation, which the model uses to understand the text sequence. These numerical vectors are then processed by the model (via the attention mechanism in the case of the Transformer) to generate output, such as answers, translations, or predictions.

Models have a maximum number of tokens they can process, e.g. the original GPT-3.5 Turbo can process up to 4,096 tokens in a single context window, while GPT-3.5 Turbo 16k goes up to about 16,000.

The longer the sequence, the more tokens are used, and this can affect the computational cost and quality of responses.

Example: Imagine you have the sentence: “Hello, how are you?”

A simple tokenization process could break it down into:

- “Hello”

- “,”

- “how”

- “are you”

- “?”

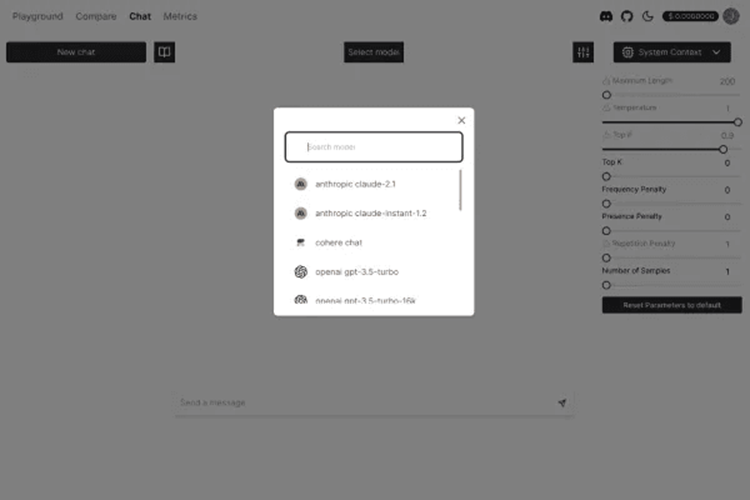
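A very simple tokenizer can be sketched with a regular expression. This is only an illustration: production models use learned sub-word tokenizers, and the tiny vocabulary built here is an assumption for the example. Note that this version splits on every word and punctuation mark, so ‘are’ and ‘you’ come out as separate tokens.

```python
import re

def tokenize(text):
    # A run of word characters, or a single punctuation character.
    return re.findall(r"\w+|[^\w\s]", text)

tokens = tokenize("Hello, how are you?")

# Map each distinct token to an integer id (a toy vocabulary).
vocabulary = {token: index for index, token in enumerate(sorted(set(tokens)))}
token_ids = [vocabulary[token] for token in tokens]

print(tokens)
print(token_ids)
```

The list of integer ids is what the model actually receives; the length of that list is what counts against the model's token limit.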

Nat.dev

Nat.dev is a platform designed to facilitate interaction with advanced language models, allowing developers to explore, test and use modern language models. It offers a playground, i.e. an interactive environment that allows customisation of parameters and settings to observe how a model’s behaviour varies according to different variables.

Variables and parameters

When interacting with an advanced language model, there are several variables or parameters that we can modify to influence the behaviour and results generated in order to achieve more creative, consistent, or tailored responses.

Temperature is one of the main parameters for controlling the creativity and randomness of responses. A lower temperature (e.g. 0.2) makes the model more deterministic and precise, while a higher temperature (e.g. 1.0 or higher) makes responses more creative, unpredictable and diverse.
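The effect of temperature can be sketched by dividing the model's raw scores (logits) by the temperature before converting them into probabilities. The candidate words and their logits below are invented for illustration.

```python
import math

def softmax_with_temperature(logits, temperature):
    # Lower temperatures sharpen the distribution, higher ones flatten it.
    scaled = [value / temperature for value in logits]
    highest = max(scaled)
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

candidates = ["blue", "cloudy", "purple"]
logits = [2.0, 1.0, 0.1]  # made-up scores for the next word

cold = softmax_with_temperature(logits, 0.2)
warm = softmax_with_temperature(logits, 1.0)

for word, p_cold, p_warm in zip(candidates, cold, warm):
    print(f"{word}: T=0.2 -> {p_cold:.3f}, T=1.0 -> {p_warm:.3f}")
```

At a temperature of 0.2 almost all of the probability lands on the top candidate, while at 1.0 the alternatives keep a realistic chance of being sampled.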

Top-p (also known as ‘nucleus sampling’) is another creativity control parameter. It sets a cumulative probability threshold: the model samples only from the most likely tokens whose combined probability reaches that threshold. A low top-p (e.g. 0.2) gives more predictable answers, while a high top-p (e.g. 0.9) increases variety in responses.
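Nucleus sampling can be sketched as a filter applied before sampling: rank the candidates, keep the most likely ones until their combined probability reaches top-p, then renormalise. The probabilities below are invented, and the function name is hypothetical.

```python
def top_p_filter(probabilities, top_p):
    """Keep the smallest set of top tokens whose cumulative probability reaches top_p."""
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, probability in ranked:
        kept.append((token, probability))
        cumulative += probability
        if cumulative >= top_p:
            break
    # Renormalise so the kept probabilities sum to 1 again.
    total = sum(probability for _, probability in kept)
    return {token: probability / total for token, probability in kept}

probabilities = {"blue": 0.5, "cloudy": 0.3, "dark": 0.15, "purple": 0.05}

narrow = top_p_filter(probabilities, 0.2)
wide = top_p_filter(probabilities, 0.9)

print(narrow)
print(wide)
```

With a top-p of 0.2 only ‘blue’ survives, so the output is fully predictable; with 0.9 three candidates remain available for sampling.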

The frequency penalty penalises the repetition of words or phrases. Increasing this parameter reduces the probability that the model repeats the same words or concepts redundantly.

The presence penalty discourages the use of words that have already appeared in the context of the conversation. The higher the value, the less likely the model will repeat topics or words that have already been used.
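One common way to apply both penalties, similar to the formulation described in the OpenAI API documentation, is to subtract them from the logits of tokens that have already been generated: the frequency penalty scales with how many times a token has appeared, while the presence penalty is a flat amount applied once. Other providers may implement these penalties differently, and the values below are invented.

```python
from collections import Counter

def apply_penalties(logits, generated_tokens, frequency_penalty, presence_penalty):
    counts = Counter(generated_tokens)
    adjusted = {}
    for token, logit in logits.items():
        count = counts[token]
        # Frequency penalty grows with each repetition;
        # presence penalty is applied once if the token appeared at all.
        flat = presence_penalty if count > 0 else 0.0
        adjusted[token] = logit - count * frequency_penalty - flat
    return adjusted

logits = {"blue": 2.0, "clear": 1.5, "grey": 1.0}  # made-up scores
already_generated = ["blue", "blue", "grey"]

adjusted = apply_penalties(logits, already_generated, 0.5, 0.25)
print(adjusted)
```

In this example ‘blue’, which was used twice, drops below ‘clear’, which has not been used yet.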

Stop sequences tell the model when to stop generating a response. You can define one or more text sequences that, if generated by the model, will make it stop immediately. This is useful to avoid excessively long or unwanted responses.
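The behaviour of stop sequences can be imitated on an already generated string, as in the sketch below. The function name and the sample text are hypothetical; a real service stops during generation rather than trimming afterwards.

```python
def truncate_at_stop(text, stop_sequences):
    """Cut the text at the earliest occurrence of any stop sequence."""
    cut = len(text)
    for stop in stop_sequences:
        index = text.find(stop)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]

generated = "Answer: 42\nQuestion: what comes next?"
print(truncate_at_stop(generated, ["\nQuestion:", "###"]))
```

The stop sequence itself is not included in the result, and text without any stop sequence is returned unchanged.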

Logit bias is a mechanism that allows you to change the probability of certain words or phrases being chosen by the model. It lets you push the model to favour (or avoid) specific words in its output by adding a bias to their logits (the raw scores that the model converts into probabilities).
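Conceptually, a logit bias is a number added to a token's logit before the probabilities are computed. The sketch below uses whole words as keys for readability, which is an assumption: real APIs usually expect token ids. The scores and bias values are invented.

```python
import math

def softmax(logits):
    highest = max(logits.values())
    exps = {token: math.exp(value - highest) for token, value in logits.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}

def apply_logit_bias(logits, bias):
    # Tokens without an entry in the bias map are left unchanged.
    return {token: value + bias.get(token, 0.0) for token, value in logits.items()}

logits = {"blue": 2.0, "grey": 1.0, "purple": 0.1}  # made-up scores
bias = {"purple": 5.0, "blue": -10.0}               # favour one word, suppress another

before = softmax(logits)
after = softmax(apply_logit_bias(logits, bias))
print(max(before, key=before.get), "->", max(after, key=after.get))
```

A large positive bias makes a word almost certain to be chosen, while a large negative bias effectively bans it.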

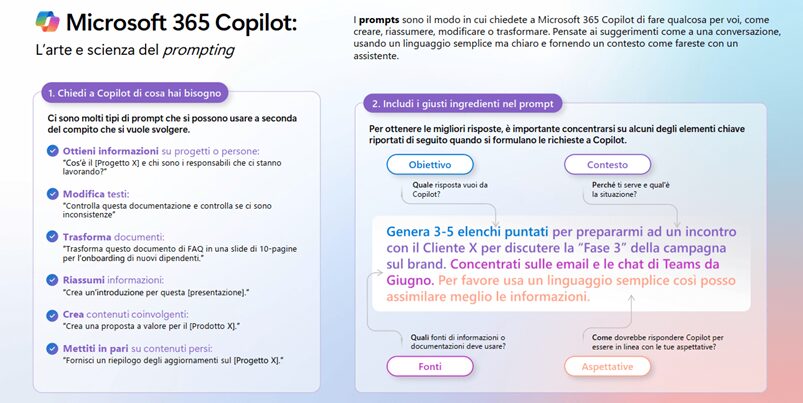

Prompt

The prompt is undoubtedly one of the most crucial elements when interacting with a model. It is the text (the question) we provide as input, and its importance should not be underestimated, as it guides the answer we get (it acts as a directive). If you provide an ambiguous or generic prompt, the answer will probably be just as generic. If the prompt is precise and clear, the response is more likely to be detailed, relevant and useful. You can also use the prompt to influence the tone and style of the response. A poorly formulated prompt may lead to incorrect or irrelevant results.

An example of how a well-formulated prompt affects the final result can be seen in text-to-image models such as Midjourney. Here, the quality of the prompt directly determines the visual output. Unlike language models, where we evaluate consistency and tone, in text-to-image the link between prompt and image is immediate and visible.

Azure Services

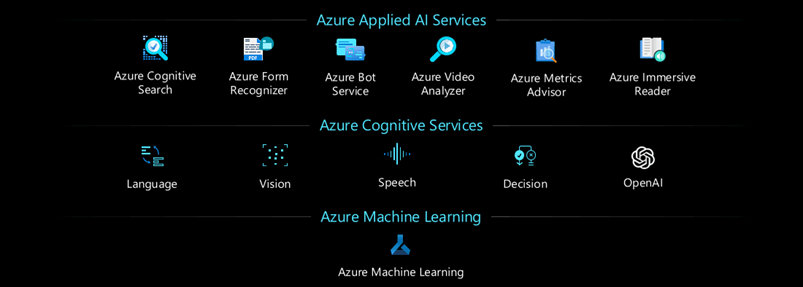

Microsoft Azure offers a wide range of cloud services that enable companies to implement intelligent solutions by leveraging artificial intelligence and machine learning. These services are part of an ecosystem that includes everything from data management to model training to application deployment.

Two main solutions stand out among them:

- Azure Machine Learning

- Azure Cognitive Services

The differences between the two solutions focus mainly on the approach taken to implementing artificial intelligence, the possibility of customisation and the level of control allowed over algorithms and models.

Azure Cognitive Services is a set of APIs and AI services pre-built by Microsoft (it is not possible to customise them for specific use cases except in a limited way). These services allow developers to add artificial intelligence to applications without having to build or train models. It includes natural language recognition, computer vision, speech recognition and text sentiment analysis. It is useful for projects that require standard functionality such as chat-bots.

Azure Machine Learning is a managed platform for developing, training and deploying complex machine learning models. It is ideal for data scientists and developers who want to create customised models with advanced algorithms. You can choose the algorithms to use, define the workflow, and train models on specific datasets. It provides complete control over algorithms, workflow and model training.

Creation and use of the AML service

The creation of the service in the Azure portal is a process involving several steps:

- The first step is the creation of a workspace that acts as a container for all objects, data, models, etc. It represents the central point where all activities will be organised;

- The creation of a workspace requires a number of additional resources to efficiently manage data, security and monitoring of operations: Azure Key Vault, Azure Storage Account, Azure Container Registry, Application Insights. These resources are configured automatically or manually during the creation of the environment.

Once the workspace has been set up, the next step is to open Azure Machine Learning Studio, a graphical platform for visual and interactive creation and management.

The studio allows you to easily upload data or use existing samples, which you can divide into sets such as training (70 per cent) and test/validation (30 per cent).
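Outside the studio, the same 70/30 split can be sketched in a few lines of Python. The function name, the fixed seed and the stand-in rows are assumptions for illustration; this is not how Azure Machine Learning Studio implements its split.

```python
import random

def split_dataset(rows, train_fraction=0.7, seed=42):
    """Shuffle a copy of the rows, then cut it into training and test sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

rows = list(range(100))  # stand-in for 100 data rows
train_rows, test_rows = split_dataset(rows)

print(len(train_rows), len(test_rows))
```

Shuffling before cutting matters when the original rows are ordered, for example by date or by class, because otherwise the test set would not be representative.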

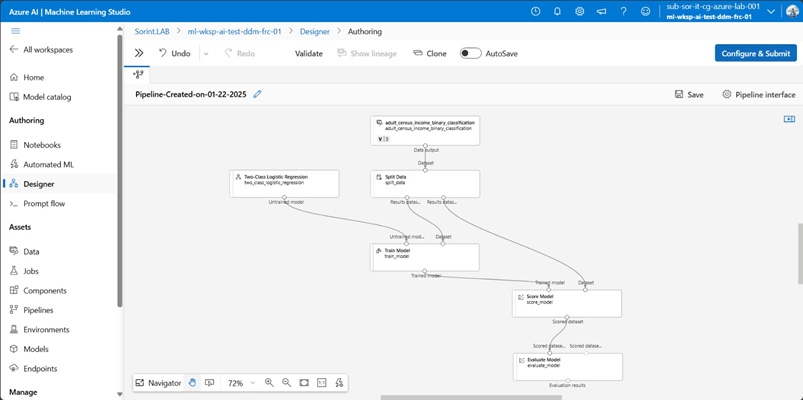

The Designer is one of the most powerful features as, with a no-code, drag-and-drop approach, it allows you to build the flow of the model by governing its entire life cycle.

With the flow built, the model can be trained, and once finished, tested and validated. The system also allows real-time monitoring of metrics such as accuracy and recall for classification models and other performance parameters.
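For reference, accuracy and recall for a binary classifier can be computed by hand as in this sketch. The true labels and predictions are invented, and the function name is hypothetical.

```python
def accuracy_and_recall(y_true, y_pred):
    pairs = list(zip(y_true, y_pred))
    true_positives = sum(1 for t, p in pairs if t == 1 and p == 1)
    true_negatives = sum(1 for t, p in pairs if t == 0 and p == 0)
    false_negatives = sum(1 for t, p in pairs if t == 1 and p == 0)
    # Accuracy: share of all predictions that are correct.
    accuracy = (true_positives + true_negatives) / len(pairs)
    # Recall: share of actual positives that the model found.
    actual_positives = true_positives + false_negatives
    recall = true_positives / actual_positives if actual_positives else 0.0
    return accuracy, recall

y_true = [1, 0, 1, 1, 0, 0, 1, 0]  # made-up ground truth
y_pred = [1, 0, 0, 0, 0, 1, 1, 0]  # made-up model predictions

accuracy, recall = accuracy_and_recall(y_true, y_pred)
print(accuracy, recall)
```

The two metrics can diverge: here the model is right on most rows overall but finds only half of the positive cases, which is why both are worth monitoring.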

Once the model is ready, the next step is deployment, using the various options of Azure Compute:

- Azure Kubernetes Service (AKS): If you want a scalable and highly available environment;

- Azure Container Instances (ACI): A simpler and lighter alternative, if your model does not need scalability or complex management;

- Edge Computing: In some cases, you may want to deploy your model directly on edge devices (for example, IoT devices).

Service endpoints

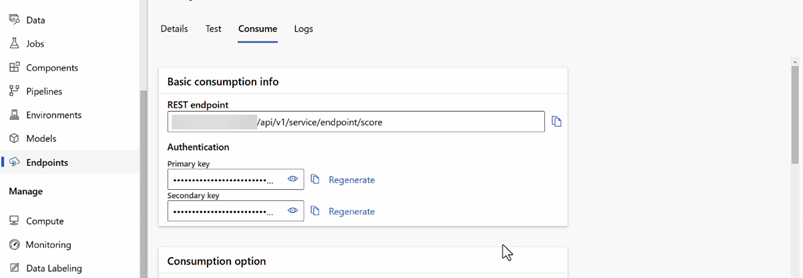

When it comes to AI services in Azure, one of the key aspects concerns interaction with services via endpoints. An endpoint is a specific URL that allows a service to be invoked through an API call. Requests are sent to such URLs to obtain or send data, such as images, text or input parameters.

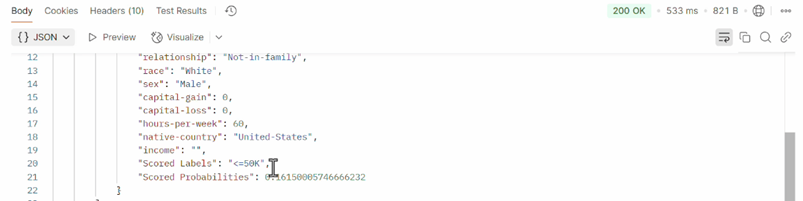

Once the endpoint has been retrieved, it can be tested via a REST request. The most commonly used tools for testing and interacting with RESTful APIs are Postman and cURL.

Interacting via Postman

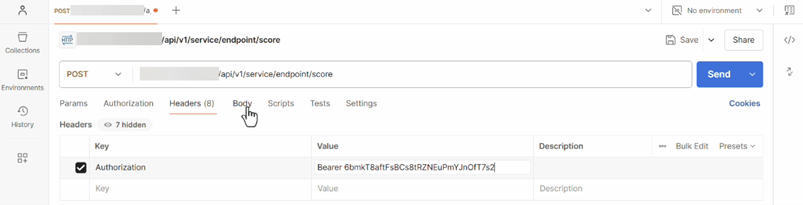

In the specific case of a POST call, we are passing information to the service in order to obtain a processed response. This is the most common approach when working with data that needs to be processed, such as text or images.

In order to send such a request, we have to configure two parts correctly:

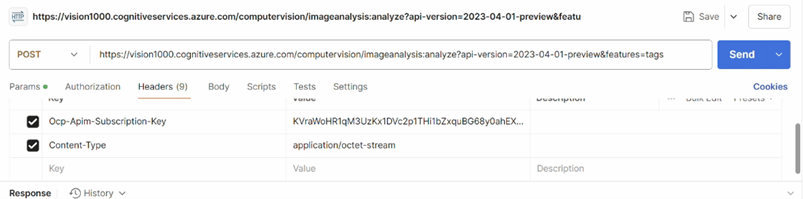

- Header: contains the necessary authentication information. We use a bearer token, generated during the creation of the service, that represents our permission to access it;

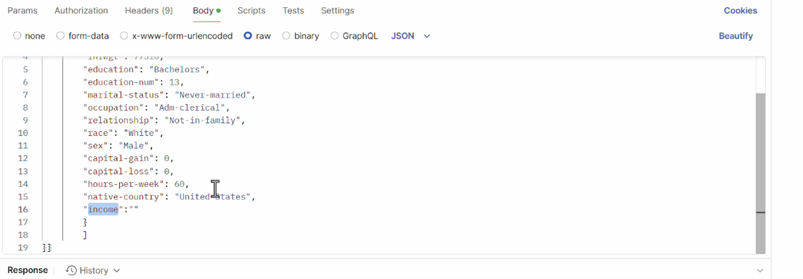

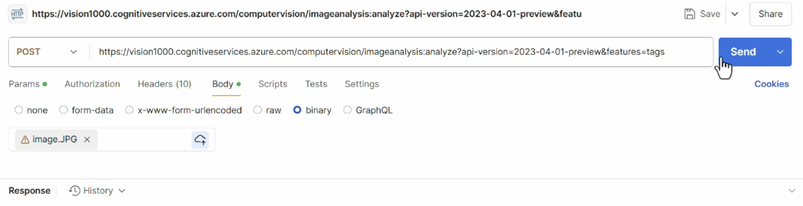

- Body: contains the data we want to send to the service. For this type of service we must use the JSON format, so the body must be a JSON string that contains the information needed to process the request.

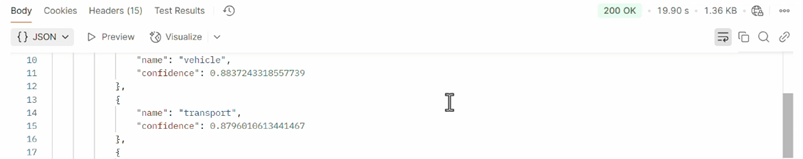

We can click on the Send button to send our call. If everything has been configured correctly, we will receive an HTTP 200 OK response, which means that the service has successfully processed the request.

The response will contain the data processed by the service.

If there is an error, such as an invalid authentication token or an error in the request format, we will receive an HTTP error code, e.g. a 400 Bad Request or a 401 Unauthorized.
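The same POST call can be sketched in Python with the standard library instead of Postman. The endpoint URL, the token and the shape of the payload are placeholders and assumptions: replace them with the values and input format of your own service.

```python
import json
import urllib.request

# Placeholders (assumptions): use the endpoint URL and token of your own service.
ENDPOINT_URL = "https://example.invalid/score"
ACCESS_TOKEN = "<your-token>"

def build_request(payload):
    """Build a POST request with a bearer token header and a JSON body."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {ACCESS_TOKEN}",
            "Content-Type": "application/json",
        },
    )

# Hypothetical payload; the real structure depends on the deployed service.
request = build_request({"data": [[1.0, 2.0, 3.0]]})
print(request.get_method(), request.full_url)

# Sending is left commented out because the URL above is only a placeholder:
# with urllib.request.urlopen(request) as response:
#     print(response.status, json.loads(response.read()))
```

When the request is actually sent, `urllib.request.urlopen` raises `urllib.error.HTTPError` for responses such as 400 or 401, so the call is usually wrapped in a `try`/`except` block.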

Azure AI Foundry

Azure AI Foundry is a comprehensive platform that brings together and integrates the services needed for the advanced development of artificial intelligence-based applications.

The platform facilitates collaboration between development, data science and business teams by enabling project management, model performance monitoring and debugging from a Hub.

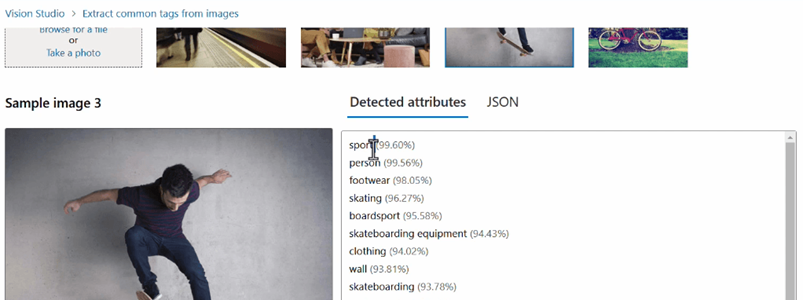

Example of a computer vision service

A practical example of the use of Azure’s Computer Vision services is the Azure Computer Vision API, which analyses images.

This service can be used in several ways: via the graphical interface offered by Azure AI Vision Studio or via the new Azure AI Foundry.

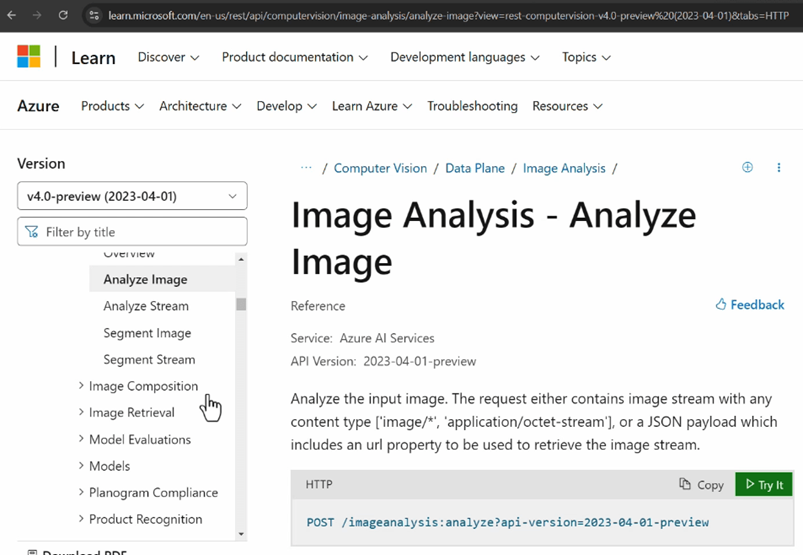

Every Azure service comes with detailed documentation.

The API reference provides the information needed to compose requests, including:

- Endpoint URL

- HTTP method

- Header and Body

- Input format

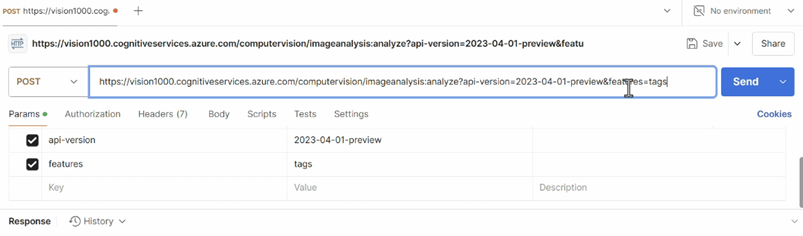

We then test the operation of this API using the Postman tool again.

Innovation with Microsoft Azure AI available for all

As we have seen in this blog post, Microsoft Azure AI represents a significant breakthrough in the artificial intelligence landscape, offering cutting-edge solutions that democratise access to advanced technologies. With its ability to integrate AI into business processes, Azure AI enables businesses of all sizes to harness the potential of cloud computing to improve operational efficiency and drive innovation. With tools such as Azure Machine Learning and Azure Cognitive Services, businesses can meet the challenges of the future with greater agility and precision, turning complex data into strategic insights.